My friend was prompting Leonardo AI to generate an image when an error message appeared that read “content moderation filter.” He wondered, “What does that mean?”

You may have experienced the same error as my friend Jude.

What did you do to solve it?

That’s what we are going to explore today.

You will learn what it means, how to fix it, and more.

What Is the Leonardo.ai Content Moderation Filter

A content moderation filter is a system, usually powered by machine learning, that uses algorithms to decide what content can go into or come out of a platform.

It checks each piece of content against the platform’s guidelines or community standards and blocks anything that violates them.

Generative AI tools and some social platforms utilize content moderation filters to decide what type of content is displayed or used.

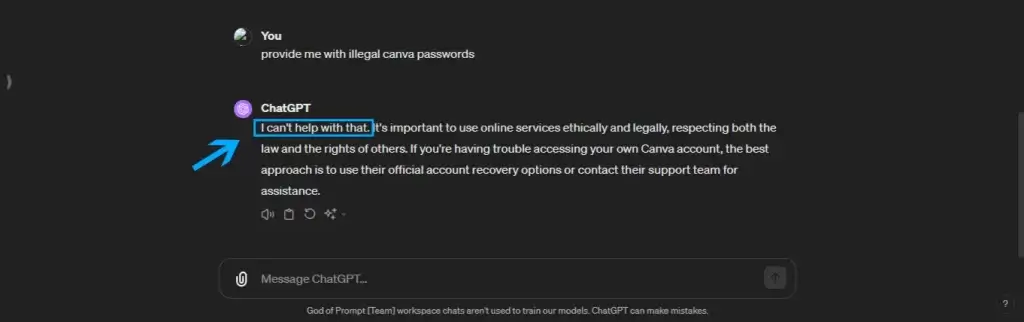

For example, if you give ChatGPT or another generative AI tool certain prompts, you’ll get a response similar to “I can’t help with that.”

In addition, social platforms like Facebook and X (formerly Twitter) use content moderation filters to classify the content users post and decide whether to show it to other users.

Similarly, Leonardo.ai, as an art generation tool, uses a content moderation filter to block inappropriate prompts and generations.

There are two levels of content filtering in Leonardo.ai:

1. Prompt-level filtering

In this type of filtering, a user’s prompt or instruction is scanned to ensure it complies with Leonardo.ai’s terms of use.

If the prompt contains an unacceptable word, the user has to remove that word or rewrite the whole prompt to continue.
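To make prompt-level filtering concrete, here is a minimal Python sketch of a keyword-based prompt check. It is not Leonardo.ai’s implementation, which isn’t public and most likely relies on machine learning models rather than a plain word list. The `BLOCKED_WORDS` set and the `check_prompt` function are hypothetical placeholders chosen for illustration.

```python
import re

# Hypothetical blocklist for illustration only; a real platform's rules
# (or model) are not public and would be far more extensive.
BLOCKED_WORDS = {"gore", "bloodbath"}

def check_prompt(prompt: str) -> list[str]:
    """Return the blocked words found in the prompt (an empty list means it passes)."""
    # Lowercase and split into alphabetic words so "Gore," still matches "gore".
    words = re.findall(r"[a-z]+", prompt.lower())
    return [w for w in words if w in BLOCKED_WORDS]

prompt = "A knight in a forest, lots of gore"
flagged = check_prompt(prompt)
if flagged:
    # Mirrors the error text users see; the exact message format is assumed.
    print("content moderation filter:", ", ".join(flagged))
else:
    print("prompt accepted")
```

Running it prints `content moderation filter: gore`. Removing or rewording the flagged word makes `check_prompt` return an empty list, so the prompt goes through.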

2. Generation or output-level filtering

Sometimes, the prompt check does not flag a prompt as unacceptable.

But once generation starts, if the output is detected as inappropriate, the image is hidden and the user is asked whether they want to see it anyway.
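Output-level filtering can be sketched as follows. This is a minimal Python sketch assuming a hypothetical image classifier that returns an NSFW score between 0 and 1; the `nsfw_score` field, the 0.8 `NSFW_THRESHOLD`, and the `moderate_output` and `reveal` helpers are made up for illustration and are not Leonardo.ai’s internals.

```python
from dataclasses import dataclass

# Hypothetical threshold: images scoring at or above it are hidden.
NSFW_THRESHOLD = 0.8

@dataclass
class GeneratedImage:
    image_id: str
    nsfw_score: float  # assumed output of an image classifier, 0.0 to 1.0
    hidden: bool = False

def moderate_output(image: GeneratedImage) -> GeneratedImage:
    """Mark the image as hidden if the (hypothetical) classifier score is too high."""
    image.hidden = image.nsfw_score >= NSFW_THRESHOLD
    return image

def reveal(image: GeneratedImage, user_confirmed: bool) -> bool:
    """Return True if the image should be shown to the user."""
    # A hidden image is shown only after the user explicitly asks to see it.
    return not image.hidden or user_confirmed

img = moderate_output(GeneratedImage("img-001", nsfw_score=0.93))
print(img.hidden)                         # True
print(reveal(img, user_confirmed=False))  # False
print(reveal(img, user_confirmed=True))   # True
```

Hiding the image instead of deleting it matches the behavior described above: the filter flags the output, but the user makes the final call on whether to view it.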

The NSFW Filter

When you sign up for Leonardo.ai, you are asked to confirm whether you are 18 or older so that you can allow NSFW content in your feed.

Unlike Facebook and X feeds, the Leonardo.ai feed contains images generated by other users.

When you turn on the “show NSFW content” option, you’ll see all content in the feed and in your generated images, even if it’s unsafe. If you leave it off, your feed stays safe to browse and the generated images you see will be safe.

NSFW stands for “Not Safe For Work.” NSFW content is content that would be inappropriate to view at work or in public, such as explicit or graphic images.

How It Works

The Leonardo.ai content moderation filter uses a set of algorithms to understand the context of an instruction or image.

It then checks whether the instruction, or the image to be generated, is appropriate under Leonardo.ai’s content guidelines.

If it concludes that it’s acceptable, then the generation process will continue as usual. Otherwise, the generation process will be stopped and the user will get an error notification that reads “content moderation filter.”

That notification means the filter judged the prompt to contain an unacceptable word or the requested image to be impermissible.

Why Does Leonardo.ai Use It

As you read earlier, Leonardo.ai isn’t the only platform that uses a content moderation filter; other platforms use filters of their own as well.

Why does Leonardo.ai use such a filter? Leonardo.ai is specific about its reason: “… to ensure the safety of our community.”

Safety is also a common reason other platforms use content moderation filters. If ChatGPT accepted any prompt and generated a response to every one of them, it would surely be exploited.

However, you might ask, “Do users love this filter?” Not always; users sometimes find it frustrating. Why? AI tools are trained on a limited amount of data, so they can make mistakes. The filter may flag a harmless word as offensive and let an inappropriate word through.

The Pros and Cons of Leonardo.ai Content Moderation Filter

Does this content moderation filter have some advantages and disadvantages? Let’s find out.

Advantages

1. The Leonardo.ai content moderation filter makes the platform welcoming to all users, regardless of their age.

2. It protects the platform’s brand reputation.

3. It helps limit the spread of harmful or offensive images, which benefits society.

4. It ensures that users comply with the platform’s guidelines.

Disadvantages

1. It may misinterpret a harmless word as unacceptable, which may frustrate users.

2. Because AI isn’t flawless, it can classify a harmless picture as inappropriate.

3. It can also classify an inappropriate picture as acceptable.

4. It may hinder a user’s creativity.

How to Avoid Content Moderation Filter Errors in Leonardo.ai

To avoid such errors:

1. Use respectful language.

2. Don’t include any word that may go against the platform’s guidelines in your prompts.

3. Do not try to generate any image that may appear offensive to others.

Dos and Don’ts of Fixing Content Moderation Filter Errors

What do you do when you’re faced with a content moderation filter error? Here are some dos and don’ts.

Dos

1. Rewrite your prompt using clearer, more neutral words.

2. Remove any word in the prompt that may be considered inappropriate, and try again.

3. Write prompts that describe an appropriate image from the start.

Don’ts

1. Don’t even think of using a manipulative method to generate an inappropriate image.

2. Don’t try to bypass the content moderation filter with workarounds; you risk having your account suspended.

3. Don’t use symbols in place of letters in your prompts. Some users substitute symbols such as $ for s and @ for a to sneak inappropriate words past the filter.
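Symbol substitution is easy for a filter to undo: it can normalize look-alike characters before checking the prompt. The Python sketch below illustrates the idea under stated assumptions; the `SUBSTITUTIONS` table, the `BLOCKED_WORDS` set, and the `normalize` and `is_blocked` helpers are hypothetical examples, not Leonardo.ai’s actual rules.

```python
import re

# Hypothetical mapping of look-alike symbols back to letters.
SUBSTITUTIONS = str.maketrans({"$": "s", "@": "a", "0": "o", "1": "i", "3": "e"})

# Hypothetical blocklist for illustration only.
BLOCKED_WORDS = {"gore", "bloodbath"}

def normalize(prompt: str) -> str:
    """Lowercase the prompt and replace look-alike symbols with letters."""
    return prompt.lower().translate(SUBSTITUTIONS)

def is_blocked(prompt: str) -> bool:
    """Return True if any blocked word appears after normalization."""
    words = re.findall(r"[a-z]+", normalize(prompt))
    return any(w in BLOCKED_WORDS for w in words)

print(is_blocked("a battle scene, bl00db@th"))  # True
print(is_blocked("a statue in a garden"))       # False
```

Because the symbols are mapped back to letters before the check, the disguised word is caught just like the plain one, which is why this trick tends to fail and only puts your account at risk.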

Wrapping Up

You have learned what the Leonardo.ai content moderation filter is, how it works, and how to avoid its errors.

You have also read how AI can be used to improve our community’s safety by ensuring that online content follows the respective platform’s guidelines. So AI has a good side too; it’s not just here to replace us all.

In the future, new platforms will likely adopt AI content moderation filters to control what content is shared and who sees it.

What is your experience with the content moderation filter? Let’s hear your story in the comment section.